Should the cars of the future be psychopaths?

Automation features in vehicles are becoming more widespread. Self-driving cars are no longer seen as a wildly futuristic concept, and the technology behind them is quickly evolving. While you won't see any fully autonomous cars on the roads just yet, the transition from human-navigated to automated vehicles is probably something we will get to witness during our lifetimes. For now, however, there are still some technical challenges that must be overcome.

But let's ignore reality for a second, and enter a world where the perfect automated vehicle already exists. This imaginary vehicle uses state-of-the-art artificial intelligence (AI) to navigate complex environments and adapt to ambiguous situations. Yet even if the car itself were flawless, it would still be vulnerable to external factors, since the outside world would continue to present dangers that could lead to accidents. This would be especially true if the car had to share the road with less technologically advanced vehicles (that is, vehicles driven by humans), which would likely remain the case for at least several years.

The age of artificial intelligence

In this future, self-driving cars will drive millions upon millions of kilometers. By sheer probability, at least some of them will encounter truly odd, extraordinary scenarios. Suppose, for instance, that such a car faced a situation in which it could preserve the lives of 10 people at the cost of its driver's life: it might have to choose between driving into a group of pedestrians and crashing into a concrete wall. Given its enormous computing power and constant monitoring of the streets, the AI should, hypothetically, be able to make a deliberate, informed decision. But which decision should its creators teach, or program, it to make? The utilitarian approach of slamming into the wall, sacrificing one for the benefit of many? That sounds reasonable, but it would be a tough sell for the car manufacturer, whose customers expect to be kept as safe as possible. Yet the alternative, a self-driving vehicle killing a group of innocent pedestrians to save its own occupant, would hardly make for great marketing either.

If a human driver were behind the wheel and had to make such a decision, whichever option he or she chose could be attributed to a lack of time to process the situation, or perhaps to an innate self-preservation instinct. Either way, it would probably be understood that this was an extremely difficult situation in which to make a split-second, life-or-death decision. This would arguably not be the case for a fully automated vehicle, whose processing capacity and decision speed (at least with regard to traffic situations) may actually exceed our own; any death it caused could be considered premeditated, or at the very least intentional. Even if the car's response were based on general pattern recognition and global behavioral tendencies rather than on a situation-specific if-then rule, the decision about which maneuver to perform would still be made deliberately by the AI. By extension, the manufacturer that created the AI would then be held responsible for that decision.

Sacrificial moral dilemmas and the neuroanatomy of psychopaths

Let's turn to the (in)famous trolley dilemma, which challenges our principles of ethics and our assumptions about the value of human life. It asks which is the rational choice from a given moral perspective: pulling a lever to divert an out-of-control trolley, thereby saving five people at the cost of killing one, or doing nothing and letting the five perish (Cao et al., 2017). Those familiar with it will recognize the similarity between this classic and the traffic dilemma I just described. A number of studies have investigated how personality differences affect decision-making in such sacrificial moral dilemmas. For example, people with psychopathic traits appear more likely to actively sacrifice the one person, regardless of whether this sacrifice is a means to, or a side effect of, preserving the lives of more people (e.g. pushing a man onto the tracks to halt the trolley and thereby save five people, versus pulling a lever to redirect the trolley, thereby killing one person rather than letting five people get crushed) (Bartels & Pizarro, 2011; Glenn et al., 2009; Koenigs et al., 2012; Tassy, Pletti et al., 2016). In particular, dysfunctions of the amygdala and the ventromedial prefrontal cortex have been proposed as the root of the differences in moral decisions seen in individuals high in psychopathic traits (Blair, 2007). Because their amygdala does not provide aversive reinforcement for actions that harm others, and because they lack remorse, sacrificing a person is much less problematic for such individuals than it is for people without psychopathic traits (Zijlmans et al., 2018).

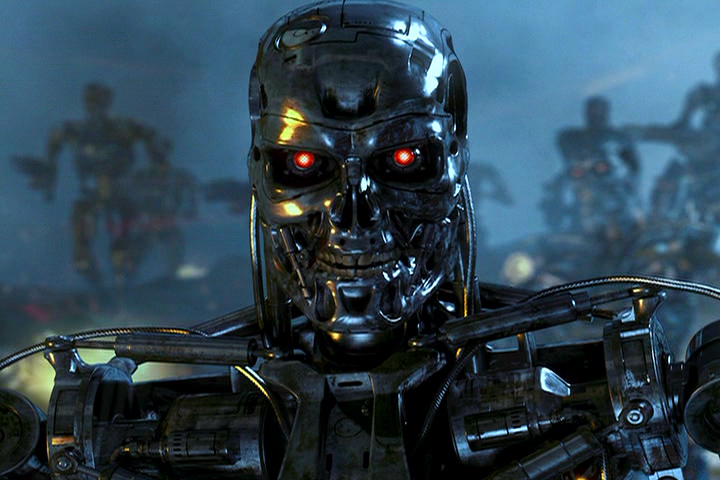

This raises an interesting question. Should the AIs controlling the cars of the future be modeled after the minds of psychopaths, in the sense of being able to make purely utilitarian decisions in exceptional circumstances?

Current developments at the RUG

One reason I have a personal interest in this thought experiment is that I've been conducting research on Level 3 automation at the driving simulator in the Muntinggebouw at the University of Groningen, in cooperation with the BMW Group. At this level of automation, the system performs the entire dynamic driving task but still hands control back to the driver in critical situations (SAE International, 2018). It is intended as a stepping stone in the transition from manual driving to fully self-driving vehicles. We've been investigating how safely people manage to regain control over their vehicle in different contexts after driving with automation for extended periods of time. Among other variables, we measured how long it took participants to perform an evasive maneuver and what kinds of maneuvers they performed in response to a critical situation. At this more rudimentary stage of automation, the moral dilemma described above does not arise, as the human is still in charge of decision-making in ambiguous situations. Eventually, however, this may change.

Finally, we need to keep in mind that situations like the trolley dilemma are extraordinarily unlikely to occur, and are therefore largely hypothetical. As such, one could argue that car developers should invest in avoiding these kinds of situations altogether, for example through constant monitoring of nearby and distant traffic (perhaps even including groups of pedestrians and the presence of concrete walls) or through early warning systems, rather than dwelling on how to handle such rare occurrences. Either way, technology is rapidly advancing, and ultimately researchers are working towards the goal of building a fully autonomous vehicle.

Whether sacrificing one for the benefit of many is ethical has been a subject of philosophical debate for centuries. We could continue this debate in the hope of somehow finding the perfect solution, but that is not what I am interested in. What I would much rather know is how people will respond to a machine making such decisions. Should the cars of the future be psychopaths? I, for one, am still trying to think of a viable compromise, but have not managed to find one yet. The only thing I'm certain of is that perfecting prevention systems should be priority number one. Ideally, a car should never need to be a psychopath. Let me know in the comments if you have a revolutionary idea!

Note: Image by Insomnia Cured Here, source: https://flic.kr/p/4gx5Pi (licensed under CC BY-SA 2.0)

References

Bartels, D. M., & Pizarro, D. A. (2011). The mismeasure of morals: Antisocial personality traits predict utilitarian responses to moral dilemmas. Cognition, 121, 154–161. doi: 10.1016/j.cognition.2011.05.010

Blair, R. J. R. (2007). The amygdala and ventromedial prefrontal cortex in morality and psychopathy. Trends in Cognitive Sciences, 11, 387–392. doi: 10.1016/j.tics.2007.07.003

Cao, F., Zhang, J., Song, L., Wang, S., Miao, D., & Peng, J. (2017). Framing effect in the trolley problem and footbridge dilemma: Number of saved lives matters. Psychological Reports, 120, 88–101. doi: 10.1177/0033294116685866

Glenn, A. L., Raine, A., Schug, R. A., Young, L. L., & Hauser, M. D. (2009). Increased DLPFC activity during moral decision-making in psychopathy. Molecular Psychiatry, 14, 909–911. doi: 10.1038/mp.2009.76

Koenigs, M., Kruepke, M., Zeier, J. D., & Newman, J. P. (2012). Utilitarian moral judgment in psychopathy. Social Cognitive and Affective Neuroscience, 7, 708–714. doi: 10.1093/scan/nsr048

Pletti, C., Lotto, L., Buodo, G., & Sarlo, M. (2017). It's immoral, but I'd do it! Psychopathy traits affect decision‐making in sacrificial dilemmas and in everyday moral situations. British Journal of Psychology, 108, 351–368. doi: 10.1111/bjop.12205

SAE International. (2018). Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. Retrieved from https://www.sae.org/standards/content/j3016_201806/

Zijlmans, J., Marhe, R., Bevaart, F., et al. (2018). Neural correlates of moral evaluation and psychopathic traits in male multi-problem young adults. Frontiers in Psychiatry, 9, 248. doi: 10.3389/fpsyt.2018.00248

Thank you for this blog, Maximilian! I agree about the prevention issue. However, I can imagine that vehicle programmers, like human drivers, will have different ideas about what effective prevention is. There’s also the potential for a ‘tragedy of the commons’-like social dilemma. If all vehicle programmers make their cars careful, prescient, and social drivers in the interest of safety, a psychopathically programmed car might be able to exploit this traffic ecosystem and hence take its occupants to their destination much quicker than all those ‘suckers’ programmed to be Mister Nice Car. 😉